Mathematics Dictionary

Absolute Value - The absolute value (or modulus) of a real number

is its distance from zero on the real number line, regardless of sign. Formally: Key points:

is always non-negative. - Geometrically,

represents the distance of from on the real line. - In

, this concept generalises to a norm , measuring a vector’s length.

Advanced uses:

- In complex analysis, for

, . - In real analysis, absolute values are critical in defining limits and convergence:

Algebra - Algebra is the branch of mathematics that studies symbols and the rules for manipulating them. It extends basic arithmetic by introducing variables to represent unknown or general quantities.

Scopes of algebra:

- Elementary Algebra:

- Solving linear and quadratic equations

- Factorising polynomials

- Manipulating algebraic expressions

- Abstract Algebra:

- Groups: A set with one operation satisfying closure, associativity, identity, and invertibility

- Rings: A set with two operations (addition, multiplication) generalising integer arithmetic

- Fields: A ring in which every nonzero element has a multiplicative inverse

Example: Solving a linear system:

- We can rewrite this system in matrix form and solve it using methods from linear algebra.

- The matrix representation is:

- Solving

typically involves finding the inverse of (when it exists) or using other factorizations (LU, QR, etc.).

A <- matrix(c(1, 2, 3, -1), nrow=2, byrow=TRUE) b <- c(1, 0) solve(A, b)## [1] 0.1428571 0.4285714Algebra underpins higher mathematics, from geometry (coordinate systems) to analysis (manipulating series expansions) and number theory (factorisation, modular arithmetic).

- Elementary Algebra:

Arithmetic - Arithmetic is the most elementary branch of mathematics, dealing with:

- Addition (

) - Subtraction (

) - Multiplication (

) - Division (

)

These operations extend naturally to concepts like integer factorisation, prime numbers, common divisors, and more.

Core properties:

- Commutative:

and . - Associative:

and . - Distributive:

.

Applications:

- Everyday calculations (e.g. budgeting, measurements)

- Foundation for algebra, number theory, and beyond

- Addition (

Asymptote - An asymptote of a function is a line (or curve) that the function approaches as the input or output grows large in magnitude.

Types:

- Horizontal:

if . - Vertical:

if . - Oblique (Slant):

Example:

- Horizontal asymptote at

- Vertical asymptote at

To analyse numerically in R:

f <- function(x) 1/x # Large values large_x <- seq(100, 1000, by=200) vals_large <- f(large_x) vals_large## [1] 0.010000000 0.003333333 0.002000000 0.001428571 0.001111111# Near x=0 small_x <- seq(-0.1, 0.1, by=0.05) vals_small <- f(small_x) vals_small## [1] -10 -20 Inf 20 10Observe how

- Horizontal:

Angle - An angle is formed by two rays (or line segments) that share a common endpoint, called the vertex. It measures the amount of rotation between these two rays.

Key characteristics:

- Units: Typically measured in degrees (

- Special angles:

- Right angle:

- Straight angle:

- Right angle:

Angle between two vectors

If

- Dot product:

- Norm:

Applications:

- Geometry (e.g. polygons, circles)

- Trigonometry (sine, cosine laws)

- Physics & engineering (rotational motion, phase angles)

- Units: Typically measured in degrees (

Binary Operation - A binary operation on a set

Examples:

- Addition (

- Multiplication (

- Matrix multiplication on square matrices of the same dimension

Properties:

- Associative:

- Commutative:

- Identity: An element

- Inverse: An element

Binary operations form the backbone of algebraic structures (groups, rings, fields) and underpin much of abstract algebra.

- Addition (

Binomial Theorem - The binomial theorem provides a formula to expand expressions of the form

where

Key points:

- It generalises the idea of multiplying out repeated factors of

- The coefficients

- Special cases include:

Applications:

- Algebraic expansions and simplifications

- Combinatorics (counting subsets, paths, etc.)

- Probability (binomial distributions)

- It generalises the idea of multiplying out repeated factors of

Bijection - A bijection (or bijective function) between two sets

- One-to-one (Injective): Different elements in

- Onto (Surjective): Every element of

Formally, a function

- If

- For every

Examples:

- Exponential

Bijective functions are crucial in algebra, combinatorics, and many areas of mathematics because they establish a perfect “pairing” between sets, enabling one-to-one correspondences (e.g., counting arguments in combinatorics).

- One-to-one (Injective): Different elements in

Basis - In linear algebra, a basis of a vector space

- Span

- Are linearly independent: No vector in the set can be written as a linear combination of the others.

If

where

Examples:

- The set

- The set of monomials

Finding a basis is central to problems in linear algebra such as simplifying linear transformations, solving systems of equations, and diagonalising matrices.

- Span

Boundary - In topology (or geometric contexts), the boundary of a set

Formally, the boundary of

where

Examples:

- In

- In

Boundaries are key in analysis (defining open/closed sets) and in geometry (curves, surfaces).

- In

Calculus - Calculus is the branch of mathematics that deals with continuous change. It is traditionally divided into two main parts:

- Differential Calculus: Concerned with rates of change and slopes of curves.

- Integral Calculus: Focuses on accumulation of quantities, areas under curves, etc.

Core concepts:

- Limit:

- Derivative:

- Integral:

Calculus is foundational in physics, engineering, economics, statistics, and many other fields.

Chain Rule - In differential calculus, the chain rule provides a way to compute the derivative of a composite function. If

Key points:

- It generalises the idea that the rate of change of a composition depends on the rate of change of the outer function evaluated at the inner function, multiplied by the rate of change of the inner function itself.

- It appears frequently in problems involving functions of functions, e.g. if

Example:

- If

- Thus,

Curl - In vector calculus, the curl of a 3D vector field

Key points:

- If curl = 0, the field is irrotational (conservative, under certain conditions).

- Vital in fluid flow, electromagnetics (e.g., Maxwell’s equations).

R demonstration (approx numeric partials for a simple field):

library(data.table) F <- function(x,y,z) c(x*y, y+z, x-z) # example h <- 1e-6 curl_approx <- function(f, x,y,z, h=1e-6) { # f => c(Fx, Fy, Fz) # partial wrt x Fx0 <- f(x,y,z) Fx_xph <- f(x+h,y,z); Fx_ymh <- f(x,y-h,z); Fx_zmh <- f(x,y,z-h) # We'll do partial derivatives in the standard determinant sense: # (∂Fz/∂y - ∂Fy/∂z, ∂Fx/∂z - ∂Fz/∂x, ∂Fy/∂x - ∂Fx/∂y) # approximate them Fz_yph <- f(x, y+h, z)[3] Fy_zph <- f(x, y, z+h)[2] Fy_xph <- f(x+h,y,z)[2] Fx_zph <- f(x,y,z+h)[1] Fz_xph <- f(x+h,y,z)[3] Fx_yph <- f(x,y+h,z)[1] c( (Fz_yph - Fx0[3]) / h - (Fy_zph - Fx0[2]) / h, (Fx_zph - Fx0[1]) / h - (Fz_xph - Fx0[3]) / h, (Fy_xph - Fx0[2]) / h - (Fx_yph - Fx0[1]) / h ) } curl_approx(F,1,2,3)## [1] -1 -1 -1Combination - In combinatorics, a combination is a way of selecting items from a collection, such that (unlike permutations) order does not matter.

- The number of ways to choose

Key points:

- Combinations are used in probability, counting arguments, and binomial expansions.

Example:

- Choosing 3 team members from 10 candidates is

- The number of ways to choose

Cardinality - In set theory, cardinality is a measure of the “number of elements” in a set. For finite sets, cardinality matches the usual concept of counting elements. For infinite sets, cardinalities compare the sizes of infinite sets via bijections.

Examples:

- The set

- The set of even integers has the same cardinality as the set of all integers (

- The real numbers have a strictly larger cardinality than the integers (uncountable infinity).

Cardinality helps classify and understand different types of infinities and is fundamental to understanding set-theoretic properties, such as countability vs. uncountability.

- The set

Covariance - In statistics and probability theory, covariance measures the joint variability of two random variables

Key observations:

- If

- If one tends to increase when the other decreases, covariance is negative.

- A covariance of zero does not necessarily imply independence (unless under specific conditions, like normality).

Example in R:

set.seed(123) X <- rnorm(10, mean=5, sd=2) Y <- rnorm(10, mean=7, sd=3) cov(X, Y)## [1] 3.431373Covariance forms the basis of correlation (a normalised version of covariance) and is central in statistics (e.g., linear regression, portfolio variance in finance).

- If

Derivative - In calculus, the derivative of a function

Formally, the derivative

Key points:

- Geometric interpretation: The slope of the tangent line to

- Practical interpretation: Instantaneous rate of change (e.g. velocity from position).

Simple R demonstration (numerical approximation):

# We'll approximate the derivative of f(x) = x^2 at x=2 using a small h f <- function(x) x^2 numeric_derivative <- function(f, a, h = 1e-5) { (f(a + h) - f(a)) / h } approx_deriv_2 <- numeric_derivative(f, 2) actual_deriv_2 <- 2 * 2 # derivative of x^2 is 2x, so at x=2 it's 4 approx_deriv_2## [1] 4.00001actual_deriv_2## [1] 4We see that

- Geometric interpretation: The slope of the tangent line to

Divergence - In vector calculus, the divergence of a vector field

Key points:

- If divergence is zero everywhere, the field is solenoidal (incompressible).

- Common in fluid dynamics, electromagnetics, etc.

R demonstration (approx numeric partials of a simple 3D field):

library(data.table) F <- function(x,y,z) c(x*y, x+z, y*z) # example vector field h <- 1e-6 divergence_approx <- function(f, x,y,z, h=1e-6) { # f => returns c(Fx, Fy, Fz) # partial wrt x fx_plus <- f(x+h,y,z); fx <- f(x,y,z) dFx_dx <- (fx_plus[1] - fx[1]) / h # partial wrt y fy_plus <- f(x,y+h,z) dFy_dy <- (fy_plus[2] - fx[2]) / h # partial wrt z fz_plus <- f(x,y,z+h) dFz_dz <- (fz_plus[3] - fx[3]) / h dFx_dx + dFy_dy + dFz_dz } divergence_approx(F, 1,2,3)## [1] 4Dimension - Dimension generally refers to the number of coordinates needed to specify a point in a space:

- In geometry, 2D refers to a plane, 3D to space, etc.

- In linear algebra, dimension is the cardinality of a basis for a vector space.

- In data science, dimension often describes the number of features or columns in a dataset.

Linear algebra perspective: If

R demonstration (showing dimension of a data.table):

library(data.table) dt_dim <- data.table( colA = rnorm(5), colB = rnorm(5), colC = rnorm(5) ) # Number of rows nrow(dt_dim)## [1] 5# Number of columns (dimension in the sense of data features) ncol(dt_dim)## [1] 3We have a 5 × 3 data.table, so we can say it has 3 “features” or columns in that sense, but in linear algebra, dimension has a more formal meaning related to basis and span.

Determinant - For a square matrix

- Geometrically, for a 2D matrix, the absolute value of the determinant gives the area scaling factor of the linear transformation represented by

For a 2×2 matrix:

Example in R:

library(data.table) # We'll create a small data.table of matrix elements dt <- data.table( a = 2, b = 1, c = 1, d = 3 ) # Convert dt to a matrix A <- matrix(c(dt$a, dt$b, dt$c, dt$d), nrow=2, byrow=TRUE) det_A <- det(A) det_A## [1] 5Decision Tree - A decision tree is a model that splits data by features to produce a tree of decisions for classification or regression. Nodes perform tests (e.g.,

Key points:

- For classification, we measure impurity using entropy or Gini index, splitting to maximise information-gain.

- For regression, splits often minimise sum of squared errors in leaves.

R demonstration (using

rpartfor a simple tree):library(rpart) library(rpart.plot) library(data.table) set.seed(123) n <- 50 x1 <- runif(n, min=0, max=5) x2 <- runif(n, min=0, max=5) y_class <- ifelse(x1 + x2 + rnorm(n, sd=1) > 5, "A","B") dt_tree <- data.table(x1=x1, x2=x2, y=y_class) fit_tree <- rpart(y ~ x1 + x2, data=dt_tree, method="class") rpart.plot(fit_tree)

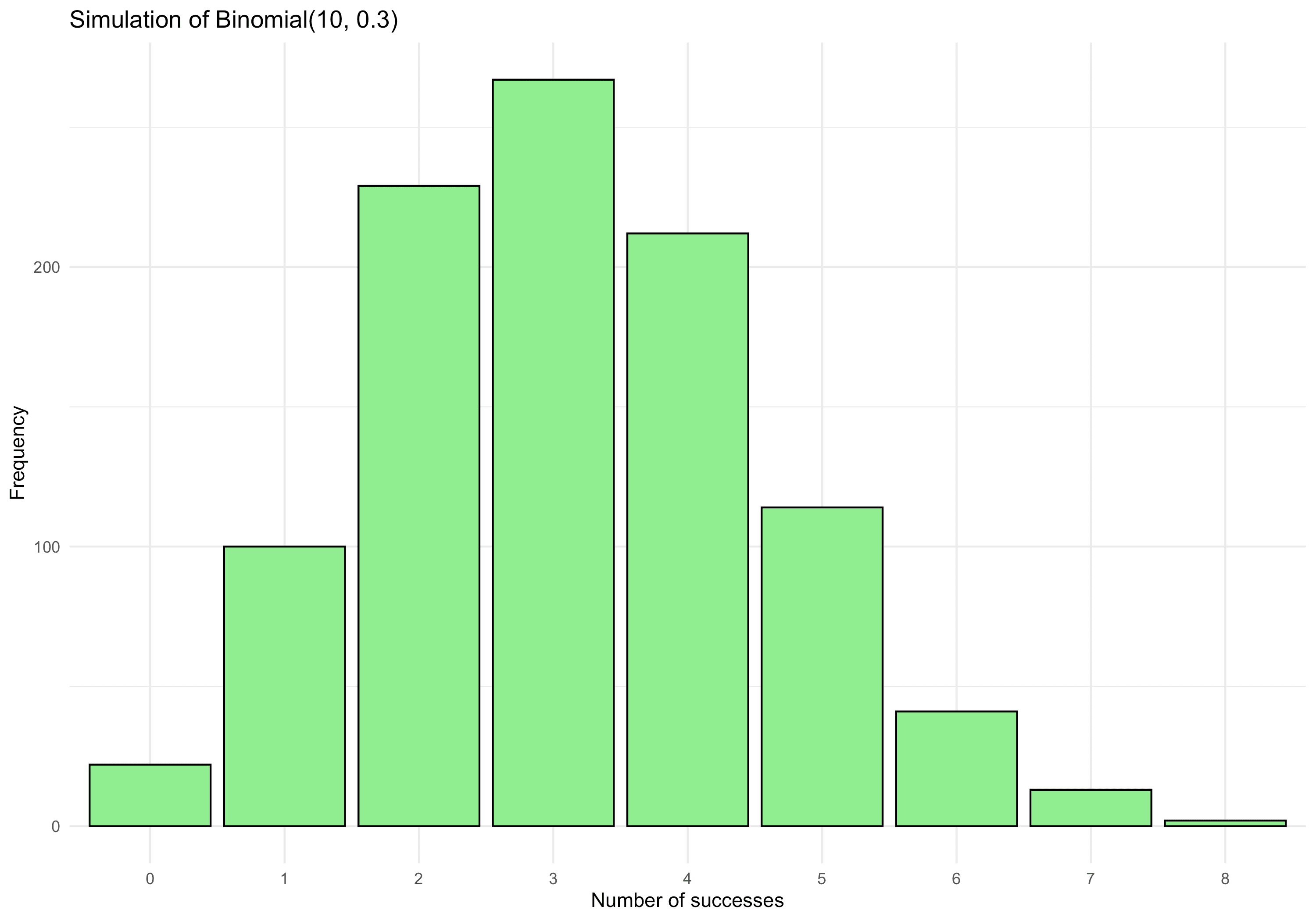

Discrete Random Variable - A discrete random variable is one that takes on a countable set of values (often integers). Typical examples include:

- Number of heads in

- Number of customers arriving at a store in an hour (Poisson process)

Probability Mass Function (pmf) for a discrete random variable

where

R demonstration (creating a binomial discrete variable):

library(data.table) library(ggplot2) # Suppose X ~ Binomial(n=10, p=0.3) n <- 10 p <- 0.3 num_sims <- 1000 # Generate 1000 realisations of X dt_binom <- data.table( X = rbinom(num_sims, size=n, prob=p) ) # Plot distribution ggplot(dt_binom, aes(x=factor(X))) + geom_bar(fill="lightgreen", colour="black") + labs( title="Simulation of Binomial(10, 0.3)", x="Number of successes", y="Frequency" ) + theme_minimal()

- Number of heads in

Distribution - In probability and statistics, a distribution describes how values of a random variable are spread out. It can be specified by a probability density function (pdf) for continuous variables or a probability mass function (pmf) for discrete variables.

Common examples:

- Normal distribution:

- Binomial distribution: Counts successes in

- Poisson distribution: Counts events in a fixed interval with known average rate

R demonstration (sampling from a normal distribution and visualising via ggplot2):

library(data.table) library(ggplot2) # Create a data.table with 1000 random N(0,1) values dt_dist <- data.table( x = rnorm(1000, mean=0, sd=1) ) # Plot a histogram ggplot(dt_dist, aes(x=x)) + geom_histogram(bins=30, colour="black", fill="skyblue") + geom_density(aes(y=..count..), colour="red", size=1) + labs( title="Histogram & Density for N(0,1)", x="Value", y="Count/Density" ) + theme_minimal()## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0. ## ℹ Please use `linewidth` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.## Warning: The dot-dot notation (`..count..`) was deprecated in ggplot2 3.4.0. ## ℹ Please use `after_stat(count)` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.

- Normal distribution:

Ellipse - An ellipse is a curve on a plane, defined as the locus of points where the sum of the distances to two fixed points (foci) is constant.

Standard form (centred at the origin):

where

R demonstration (plotting an ellipse with ggplot2):

library(data.table) library(ggplot2) # Let's parametric form: x = a*cos(t), y = b*sin(t) a <- 3 b <- 2 theta <- seq(0, 2*pi, length.out=200) dt_ellipse <- data.table( x = a*cos(theta), y = b*sin(theta) ) ggplot(dt_ellipse, aes(x=x, y=y)) + geom_path(color="blue", size=1) + coord_fixed() + labs( title="Ellipse with a=3, b=2", x="x", y="y" ) + theme_minimal()## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0. ## ℹ Please use `linewidth` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.

Entropy - In information theory, entropy quantifies the average amount of information contained in a random variable’s possible outcomes. For a discrete random variable

Key points:

- Entropy is maximised when all outcomes are equally likely.

- Low entropy implies outcomes are more predictable.

- It underpins coding theory, compressions, and measures of uncertainty.

R demonstration (computing entropy of a discrete distribution):

library(data.table) entropy_shannon <- function(prob_vec) { # Make sure prob_vec sums to 1 -sum(prob_vec * log2(prob_vec), na.rm=TRUE) } dt_prob <- data.table( outcome = letters[1:4], prob = c(0.1, 0.4, 0.3, 0.2) # must sum to 1 ) H <- entropy_shannon(dt_prob$prob) H## [1] 1.846439Eigenvalue - In linear algebra, an eigenvalue of a square matrix

Key points:

- Eigenvalues reveal important properties of linear transformations (e.g., scaling factors in certain directions).

- If

- The polynomial

R demonstration (finding eigenvalues of a 2x2 matrix):

library(data.table) # Create a data.table for matrix entries dtA <- data.table(a=2, b=1, c=1, d=2) A <- matrix(c(dtA$a, dtA$b, dtA$c, dtA$d), nrow=2, byrow=TRUE) A## [,1] [,2] ## [1,] 2 1 ## [2,] 1 2# Compute eigenvalues using base R eigs <- eigen(A) eigs$values## [1] 3 1eigs$vectors## [,1] [,2] ## [1,] 0.7071068 -0.7071068 ## [2,] 0.7071068 0.7071068Expectation - In probability theory, the expectation (or expected value) of a random variable

For a discrete random variable:

For a continuous random variable:

where

R demonstration (empirical estimation of expectation):

library(data.table) set.seed(123) # Suppose X ~ Uniform(0, 10) X_samples <- runif(10000, min=0, max=10) dtX <- data.table(X = X_samples) # Empirical mean emp_mean <- mean(dtX$X) # Theoretical expectation for Uniform(0, 10) is 5 theoretical <- 5 emp_mean## [1] 4.975494theoretical## [1] 5Field - In abstract algebra, a field is a ring in which every nonzero element has a multiplicative inverse. The real numbers

Key points:

- Both addition and multiplication exist and distribute.

- Every nonzero element is invertible under multiplication.

- Foundation of much of modern mathematics (vector spaces, linear algebra).

No direct R demonstration typical.

cat("Examples: ℚ, ℝ, ℂ all form fields with standard + and *.")## Examples: ℚ, ℝ, ℂ all form fields with standard + and *.Fourier Transform - The Fourier transform is a powerful integral transform that expresses a function of time (or space) as a function of frequency. For a function

Key points:

- Decomposes signals into sums (integrals) of sines and cosines (complex exponentials).

- Essential in signal processing, differential equations, image analysis, etc.

Discrete analogue (DFT) in R demonstration:

library(data.table) library(ggplot2) # Create a time series with two sine waves set.seed(123) n <- 256 t <- seq(0, 2*pi, length.out=n) f1 <- 1 # frequency 1 f2 <- 5 # frequency 5 signal <- sin(f1*t) + 0.5*sin(f2*t) dt_sig <- data.table( t = t, signal = signal ) # Compute discrete Fourier transform # We'll use stats::fft FT <- fft(dt_sig$signal) modulus <- Mod(FT[1:(n/2)]) # we only look at half (Nyquist) dt_dft <- data.table( freq_index = 1:(n/2), amplitude = modulus ) # Plot amplitude ggplot(dt_dft, aes(x=freq_index, y=amplitude)) + geom_line(color="blue") + labs( title="DFT amplitude spectrum", x="Frequency Index", y="Amplitude" ) + theme_minimal()

Function - A function

Key points:

- Each input has exactly one output (well-defined).

- One of the most fundamental concepts in mathematics.

R demonstration (defining a simple function in R):

library(data.table) # A function that squares its input f <- function(x) x^2 dt_fun <- data.table( x = -3:3 ) dt_fun[, f_x := f(x)] dt_fun## x f_x ## <int> <num> ## 1: -3 9 ## 2: -2 4 ## 3: -1 1 ## 4: 0 0 ## 5: 1 1 ## 6: 2 4 ## 7: 3 9Fractal - A fractal is a geometric object that often exhibits self-similarity at various scales. Examples include the Mandelbrot set, Julia sets, and natural phenomena (coastlines, etc.).

Key traits:

- Self-similarity: Zoomed-in portions look similar to the original.

- Fractional dimension: Dimension can be non-integer.

- Often defined recursively or via iterative processes.

R demonstration (a simple iteration for the Koch snowflake boundary length, numerical only):

library(data.table) koch_iterations <- 5 dt_koch <- data.table(step = 0:koch_iterations) # Start with length 1 for the side of an equilateral triangle # Each iteration multiplies the total line length by 4/3 dt_koch[, length := (4/3)^step] dt_koch## step length ## <int> <num> ## 1: 0 1.000000 ## 2: 1 1.333333 ## 3: 2 1.777778 ## 4: 3 2.370370 ## 5: 4 3.160494 ## 6: 5 4.213992Factorial - For a positive integer

By convention,

Key points:

- Factorials grow very quickly (super-exponential growth).

- Central to combinatorics:

- Appears in formulas such as binomial coefficients

R demonstration (illustrating factorial growth):

library(data.table) # Let's build a small data.table of n and n! dt_fact <- data.table( n = 1:6 ) dt_fact[, factorial_n := factorial(n)] dt_fact## n factorial_n ## <int> <num> ## 1: 1 1 ## 2: 2 2 ## 3: 3 6 ## 4: 4 24 ## 5: 5 120 ## 6: 6 720Frequency - Frequency in mathematics and statistics can refer to:

- Statistical frequency: How often a value appears in a dataset.

- Periodic phenomenon: The number of cycles per unit time (e.g., in sine waves, signals).

Statistical frequency:

- Relative frequency = count of event / total observations.

- Frequency table is a basic summary in data analysis.

Periodic frequency (in signals):

- If

R demonstration (calculating frequencies in a categorical dataset):

library(data.table) # Suppose a small categorical variable dt_freq <- data.table( category = c("A", "B", "A", "C", "B", "A", "B", "B", "C") ) # Frequency count freq_table <- dt_freq[, .N, by=category] setnames(freq_table, "N", "count") freq_table[, rel_freq := count / sum(count)] freq_table## category count rel_freq ## <char> <int> <num> ## 1: A 3 0.3333333 ## 2: B 4 0.4444444 ## 3: C 2 0.2222222Group - In abstract algebra, a group is a set

- Closure: For all

- Associativity:

- Identity: There exists

- Inverse: For each

Examples:

- Integers under addition

- Nonzero real numbers under multiplication

- Symmetry groups in geometry

While direct R demonstration is less obvious, one could illustrate a finite group:

library(data.table) # Let's define a small group table: Z2 x Z2 (Klein group), with elements { (0,0), (0,1), (1,0), (1,1) } under addition mod 2 # We'll store all results in a data.table dt_group <- data.table( elem1 = c("(0,0)","(0,0)","(1,0)","(1,0)","(0,1)","(0,1)","(1,1)","(1,1)"), elem2 = c("(0,0)","(1,0)","(0,0)","(1,0)","(0,1)","(1,1)","(0,1)","(1,1)") ) # We'll define a small function that "adds" these pairs mod 2 add_mod2_pairs <- function(a, b) { # parse, e.g. "(1,0)" a_vals <- as.integer(unlist(strsplit(gsub("[()]", "", a), ","))) b_vals <- as.integer(unlist(strsplit(gsub("[()]", "", b), ","))) sum_vals <- (a_vals + b_vals) %% 2 paste0("(", sum_vals[1], ",", sum_vals[2], ")") } dt_group[, result := mapply(add_mod2_pairs, elem1, elem2)] dt_group## elem1 elem2 result ## <char> <char> <char> ## 1: (0,0) (0,0) (0,0) ## 2: (0,0) (1,0) (1,0) ## 3: (1,0) (0,0) (1,0) ## 4: (1,0) (1,0) (0,0) ## 5: (0,1) (0,1) (0,0) ## 6: (0,1) (1,1) (1,0) ## 7: (1,1) (0,1) (1,0) ## 8: (1,1) (1,1) (0,0)This small table demonstrates closure in the group. Associativity, identity, and inverses also hold, though not explicitly shown here.

- Closure: For all

Gradient - In multivariable calculus, the gradient of a scalar field

Interpretation:

- Points in the direction of steepest ascent of

- Magnitude represents the rate of increase in that direction.

R demonstration (numerically approximating a gradient for

library(data.table) f_xy <- function(x, y) x^2 + 2*x*y numeric_grad <- function(f, x, y, h=1e-6) { df_dx <- (f(x+h, y) - f(x, y)) / h df_dy <- (f(x, y+h) - f(x, y)) / h c(df_dx, df_dy) } grad_1_2 <- numeric_grad(f_xy, 1, 2) grad_1_2 # The exact gradient is (2x + 2y, 2x). At (1,2) => (2*1 + 2*2, 2*1) => (6,2)## [1] 6.000001 2.000000- Points in the direction of steepest ascent of

Generating Function - A generating function is a formal power series whose coefficients encode information about a sequence

Key points:

- Used extensively in combinatorics to derive closed forms or recurrences.

- Different types (ordinary, exponential) depending on the combinatorial interpretation.

Example: The ordinary generating function for the sequence

No direct data.table example is typical here, but generating functions are used in discrete math. One might do symbolic manipulations with external packages. We can, however, illustrate partial sums numerically:

library(data.table) x_val <- 0.2 num_terms <- 10 dt_genfun <- data.table( n = 0:(num_terms-1) ) dt_genfun[, term := x_val^n] partial_sum <- dt_genfun[, sum(term)] partial_sum## [1] 1.25# Compare to closed-form 1/(1 - x_val) closed_form <- 1 / (1 - x_val) closed_form## [1] 1.25Graph - In graph theory, a graph is a set of vertices (nodes) connected by edges (links). Formally, a graph

Types:

- Simple vs. multigraph (multiple edges)

- Directed vs. undirected

- Weighted vs. unweighted

Applications:

- Social networks (people as nodes, relationships as edges)

- Transportation (cities as nodes, roads as edges)

- Computer science (data structures, BFS/DFS, shortest paths)

R demonstration (constructing a small graph with igraph):

library(igraph)## ## Attaching package: 'igraph'## The following objects are masked from 'package:stats': ## ## decompose, spectrum## The following object is masked from 'package:base': ## ## union# Create an undirected graph with edges g <- graph(edges=c("A","B", "B","C", "A","C", "C","D"), directed=FALSE)## Warning: `graph()` was deprecated in igraph 2.1.0. ## ℹ Please use `make_graph()` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.plot(g, vertex.color="lightblue", vertex.size=30)

GAN (Generative Adversarial Network) - A GAN consists of two neural networks: a generator

Key points:

- The generator improves to fool the discriminator, while the discriminator improves to detect fakes.

- Commonly used for image synthesis, text generation, etc.

R demonstration (Again, implementing a full GAN in R is nontrivial, but we show a minimal conceptual snippet):

library(data.table) cat("Minimal conceptual code. Usually done with torch or tensorflow in Python. We'll pseudo-code one step.\n")## Minimal conceptual code. Usually done with torch or tensorflow in Python. We'll pseudo-code one step.gen_step <- function(z, G_params) { # fwd pass to produce G(z) # ... # return synthetic data } disc_step <- function(x, D_params) { # fwd pass to produce D(x) # ... # return a probability } # Then update G_params, D_params via gradient cat("GAN training step = minimize log(1 - D(G(z))) wrt G, maximize log D(x) + log(1 - D(G(z))) wrt D.\n")## GAN training step = minimize log(1 - D(G(z))) wrt G, maximize log D(x) + log(1 - D(G(z))) wrt D.Gamma Function - The Gamma function generalises the factorial to complex (and real) arguments. For

Key property:

Key points:

- Extends factorial beyond integers (e.g.,

- Appears often in probability (Gamma distribution) and complex analysis.

R demonstration (Gamma function values):

library(data.table) # We'll evaluate Gamma at some points dt_gamma <- data.table(x = c(0.5, 1, 2, 3, 4)) dt_gamma[, gamma_x := gamma(x)] dt_gamma## x gamma_x ## <num> <num> ## 1: 0.5 1.772454 ## 2: 1.0 1.000000 ## 3: 2.0 1.000000 ## 4: 3.0 2.000000 ## 5: 4.0 6.000000- Extends factorial beyond integers (e.g.,

Homomorphism - In algebra, a homomorphism is a structure-preserving map between two algebraic structures of the same type. For instance, a group homomorphism between groups

such that:

where

This ensures that the algebraic structure (associativity, identity, inverses) is respected.

No direct R demonstration is typical for homomorphisms, but we can quickly illustrate a trivial map:

# As a simple example: # A map from Z -> Z given by phi(x) = 2*x is a group homomorphism under addition. phi <- function(x) 2*x phi(3) # 6## [1] 6# phi(a + b) = 2*(a + b) = 2a + 2b = phi(a) + phi(b)Heaviside Step Function - The Heaviside step function, often denoted

Key points:

- Used in signal processing and differential equations to represent a “switch on” at

- Sometimes defined with

R demonstration:

library(data.table) H <- function(x) ifelse(x<0,0,1) test_x <- seq(-2,2,by=0.5) data.table(x=test_x, H=H(test_x))## x H ## <num> <num> ## 1: -2.0 0 ## 2: -1.5 0 ## 3: -1.0 0 ## 4: -0.5 0 ## 5: 0.0 1 ## 6: 0.5 1 ## 7: 1.0 1 ## 8: 1.5 1 ## 9: 2.0 1- Used in signal processing and differential equations to represent a “switch on” at

Hyperbola - A hyperbola is a conic section formed by the intersection of a plane and a double cone, but at a steeper angle than that of a parabola. In standard form, a hyperbola centered at the origin can be written as:

(for the east-west opening case). Hyperbolas have two branches and characteristic asymptote lines that the hyperbola approaches as

R demonstration (plotting a hyperbola segment in ggplot2):

library(data.table) library(ggplot2) a <- 2 b <- 1 x_vals <- seq(-5, 5, by=0.01) dt_hyp <- data.table( x = x_vals ) # For x^2/a^2 - y^2/b^2 = 1 => y^2 = (x^2/a^2 - 1)*b^2 # We only plot real solutions (where x^2/a^2 > 1) dt_hyp[, y_pos := ifelse(abs(x/a) > 1, b*sqrt((x^2/a^2) - 1), NA)]## Warning in sqrt((x^2/a^2) - 1): NaNs produceddt_hyp[, y_neg := ifelse(abs(x/a) > 1, -b*sqrt((x^2/a^2) - 1), NA)]## Warning in sqrt((x^2/a^2) - 1): NaNs producedggplot() + geom_line(aes(x=x, y=y_pos), data=dt_hyp, color="blue") + geom_line(aes(x=x, y=y_neg), data=dt_hyp, color="blue") + coord_fixed() + labs( title="Hyperbola for x^2/4 - y^2/1 = 1", x="x", y="y" ) + theme_minimal()

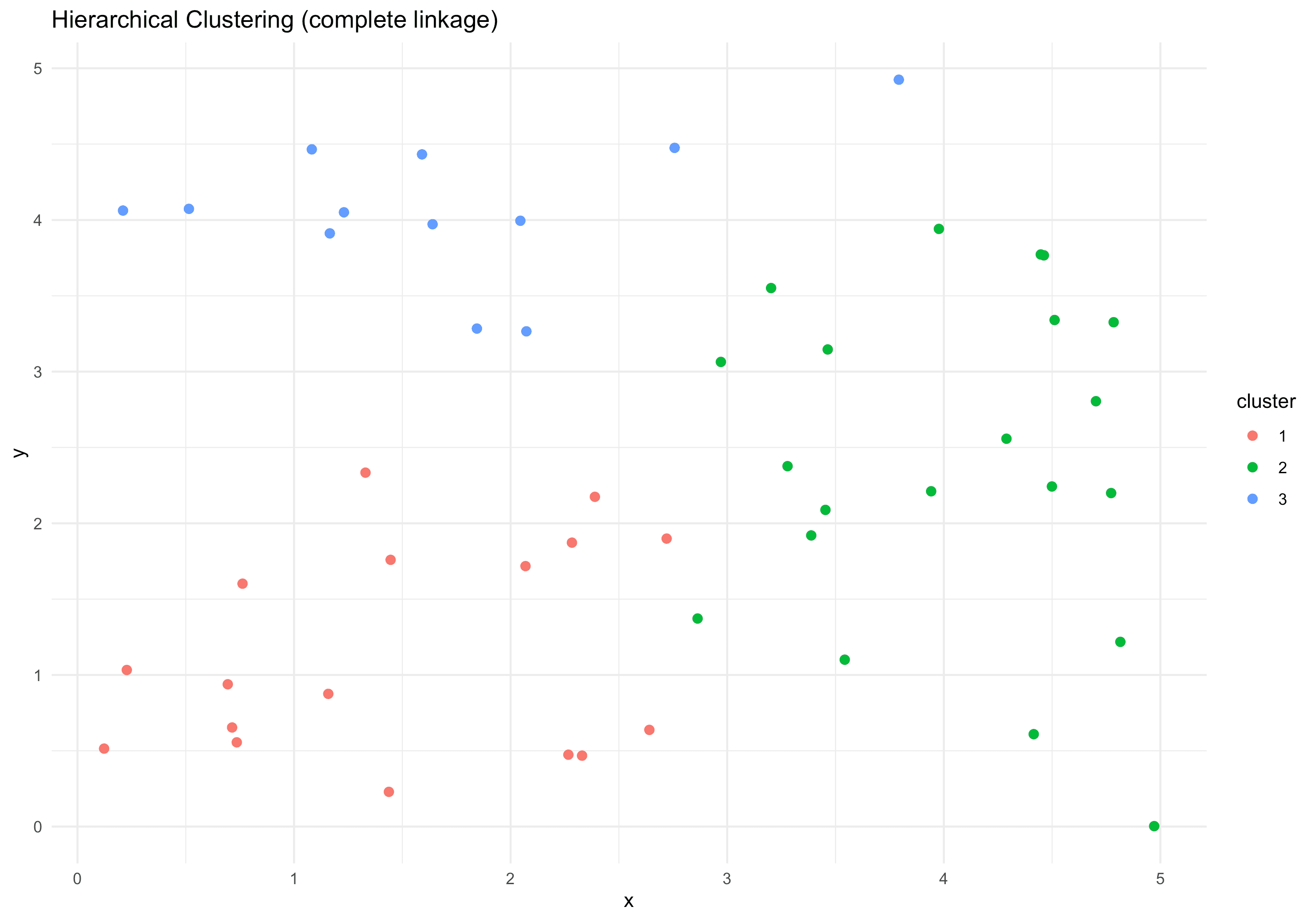

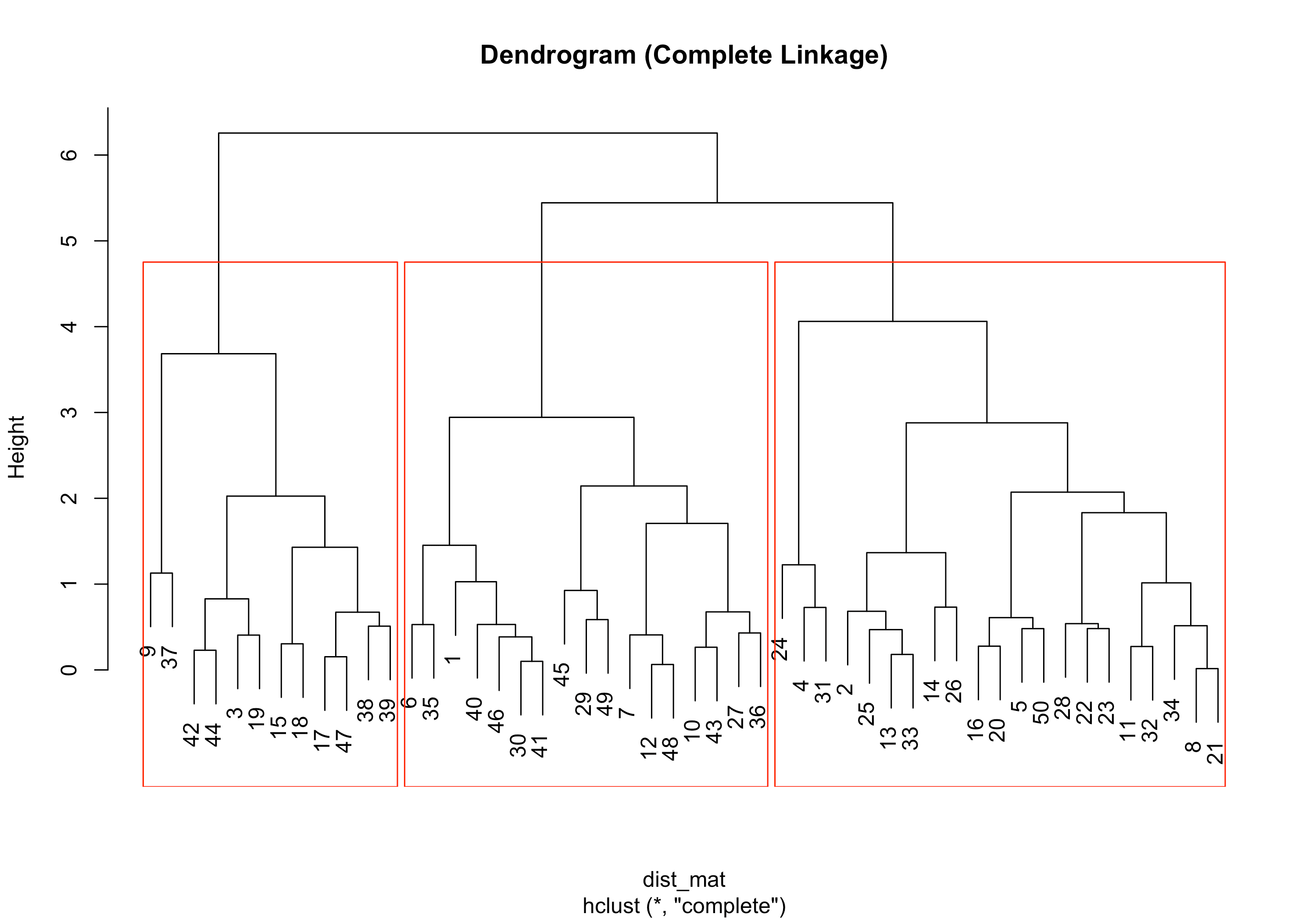

Hierarchical Clustering - A clustering method that builds a hierarchy of clusters either bottom-up (agglomerative) or top-down (divisive). Distances between clusters can be defined by single, complete, average linkage, etc. A dendrogram shows the merge/split hierarchy.

Algorithm (agglomerative):

- Start with each point as its own cluster.

- Merge clusters pairwise based on smallest distance until one cluster remains.

Distance metrics:

- Single linkage:

- Complete linkage:

R demonstration (using

hcluston 2D data):library(data.table) library(ggplot2) set.seed(123) n <- 50 x <- runif(n,0,5) y <- runif(n,0,5) dt_hc <- data.table(x,y) dist_mat <- dist(dt_hc[, .(x,y)]) hc <- hclust(dist_mat, method="complete") # We can cut the tree at some height to form k clusters clust <- cutree(hc, k=3) dt_hc[, cluster := factor(clust)] # Plot clusters ggplot(dt_hc, aes(x=x, y=y, color=cluster)) + geom_point(size=2) + labs(title="Hierarchical Clustering (complete linkage)", x="x", y="y") + theme_minimal()

# Dendrogram plot(hc, main="Dendrogram (Complete Linkage)") rect.hclust(hc, k=3, border="red")

Harmonic Mean - The harmonic mean of a set of positive numbers

- This measure is particularly useful when averaging rates or ratios.

- Compare with the arithmetic mean (the usual average), and other means (geometric, quadratic, etc.).

R demonstration (computing harmonic mean):

library(data.table) harmonic_mean <- function(x) { n <- length(x) n / sum(1/x) } dt_hm <- data.table(values = c(2, 3, 6, 6, 12)) my_hm <- harmonic_mean(dt_hm$values) my_hm## [1] 4Histogram - A histogram is a graphical representation of the distribution of numerical data. It groups data into bins (intervals) and displays the count or frequency within each bin, providing a quick visual of how values are spread.

It’s directly related to a distribution in statistics, visually summarising the frequency or relative frequency of data within specified intervals.

R demonstration (constructing a histogram):

library(data.table) library(ggplot2) set.seed(123) dt_hist <- data.table(x = rnorm(500, mean=10, sd=2)) ggplot(dt_hist, aes(x=x)) + geom_histogram(bins=30, fill="lightblue", colour="black") + labs(title="Histogram of Random Normal Data", x="Value", y="Count") + theme_minimal()

Hypothesis Testing - In statistics, hypothesis testing is a method to decide whether sample data support or refute a particular hypothesis about a population parameter or distribution.

Common steps:

- State the null hypothesis (

- Choose a significance level (

- Compute the p-value from sample data.

- Reject or fail to reject

R demonstration (example t-test):

library(data.table) set.seed(123) dt_ht <- data.table( groupA = rnorm(20, mean=5, sd=1), groupB = rnorm(20, mean=5.5, sd=1) ) # Let's do a two-sample t-test res <- t.test(dt_ht$groupA, dt_ht$groupB, var.equal=TRUE) res## ## Two Sample t-test ## ## data: dt_ht$groupA and dt_ht$groupB ## t = -1.0742, df = 38, p-value = 0.2895 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -0.8859110 0.2716729 ## sample estimates: ## mean of x mean of y ## 5.141624 5.448743- State the null hypothesis (

Induction - Mathematical induction is a proof technique used to show that a statement holds for all natural numbers. It involves two steps:

- Base Case: Prove the statement for the first natural number (often

- Inductive Step: Assume the statement holds for some

This relies on the well-ordering principle of the natural numbers.

Consider a simple example with arithmetic progressions:

- We may prove

No complicated R demonstration is typical here, but we can at least verify sums for a few values:

library(data.table) n_vals <- 1:10 dt_ind <- data.table( n = n_vals, sum_n = sapply(n_vals, function(k) sum(1:k)), formula = n_vals*(n_vals+1)/2 ) dt_ind## n sum_n formula ## <int> <int> <num> ## 1: 1 1 1 ## 2: 2 3 3 ## 3: 3 6 6 ## 4: 4 10 10 ## 5: 5 15 15 ## 6: 6 21 21 ## 7: 7 28 28 ## 8: 8 36 36 ## 9: 9 45 45 ## 10: 10 55 55- Base Case: Prove the statement for the first natural number (often

Interval - In analysis, an interval is a connected subset of the real-number-line. Common types of intervals include:

- Open interval:

- Closed interval:

- Half-open / half-closed:

Intervals are the building blocks of basic topology on the real line and are central in defining integrals, continuity, and other concepts of real analysis.

# Minimal R demonstration: we can define intervals simply with numeric vectors my_interval <- 0:5 # representing discrete steps from 0 to 5 my_interval## [1] 0 1 2 3 4 5- Open interval:

Integral - In calculus, an integral represents the accumulation of quantities or the area under a curve. It is the inverse operation to the derivative (by the Fundamental Theorem of Calculus).

For a function

Key points:

- Indefinite integral:

- Riemann sums approximate integrals by partitioning the interval and summing “area slices.”

R demonstration (numeric approximation of an integral via trapezoidal rule):

library(data.table) f <- function(x) x^2 a <- 0 b <- 3 n <- 100 x_vals <- seq(a, b, length.out=n+1) dx <- (b - a)/n trapezoid <- sum((f(x_vals[-1]) + f(x_vals[-(n+1)]))/2) * dx trapezoid # approximate integral of x^2 from 0 to 3 = 9## [1] 9.00045- Indefinite integral:

Injection - In functions (set theory), an injection (or one-to-one function) is a function

Key points:

- No two distinct elements in

- Contrasts with surjection (onto) and bijection (one-to-one and onto).

R demonstration (not typical, but we can check uniqueness in a numeric map):

library(data.table) f_injective <- function(x) x^2 # for integers, watch out for collisions at +/-x x_vals <- c(-2,-1,0,1,2) f_vals <- f_injective(x_vals) data.table(x=x_vals, f=f_vals)## x f ## <num> <num> ## 1: -2 4 ## 2: -1 1 ## 3: 0 0 ## 4: 1 1 ## 5: 2 4# Notice that x^2 is not injective over all integers (f(-2)=f(2)). # But restricted to nonnegative x, it can be injective.- No two distinct elements in

Identity Matrix - In linear algebra, the identity matrix

Key points:

- Its determinant is 1 for all

- Invertible matrices always have an identity matrix (the “unit” of their multiplicative structure).

R demonstration (creating identity matrices):

library(data.table) I2 <- diag(2) I3 <- diag(3) I2## [,1] [,2] ## [1,] 1 0 ## [2,] 0 1I3## [,1] [,2] [,3] ## [1,] 1 0 0 ## [2,] 0 1 0 ## [3,] 0 0 1Intersection - In set theory, the intersection of two sets

- Compare this with the union

- The empty set

No special R demonstration is typically needed, but we can illustrate a basic example using sets as vectors:

A <- c(1, 2, 3, 4) B <- c(3, 4, 5, 6) intersect(A, B) # yields 3,4## [1] 3 4- Compare this with the union

Jensen's Inequality - In analysis, Jensen’s inequality states that for a convex function

If

R demonstration (empirical illustration):

library(data.table) set.seed(123) X <- runif(1000, min=0, max=2) # random draws in [0,2] phi <- function(x) x^2 # a convex function mean_X <- mean(X) lhs <- phi(mean_X) rhs <- mean(phi(X)) lhs## [1] 0.9891408rhs## [1] 1.319398# Typically: lhs <= rhs (Jensen's inequality for convex phi)Jacobian - In multivariable calculus, the Jacobian of a vector function

is the- The determinant of this matrix (if

- It generalises the concept of the gradient (when

R demonstration (numerical approximation of a Jacobian):

library(data.table) f_xy <- function(x, y) c(x^2 + 3*y, 2*x + y^2) approx_jacobian <- function(f, x, y, h=1e-6) { # f should return a vector c(f1, f2, ...) # We'll approximate partial derivatives w.r.t x and y. f_at_xy <- f(x, y) # partial w.r.t x f_at_xplus <- f(x + h, y) df_dx <- (f_at_xplus - f_at_xy) / h # partial w.r.t y f_at_yplus <- f(x, y + h) df_dy <- (f_at_yplus - f_at_xy) / h rbind(df_dx, df_dy) } approx_jacobian(f_xy, 1, 2)## [,1] [,2] ## df_dx 2.000001 2.000000 ## df_dy 3.000000 4.000001- The determinant of this matrix (if

Julia Set - In complex dynamics, a Julia set is the boundary of points in the complex plane describing the behaviour of a complex function, often associated with the iteration of polynomials like

Key points:

- For each complex parameter

- The set often exhibits self-similarity and intricate boundaries.

R demonstration (simple iteration to classify points):

library(data.table) library(ggplot2) # We'll do a basic "escape-time" iteration for z^2 + c, with c = -0.8 + 0.156i c_val <- complex(real=-0.8, imaginary=0.156) n <- 400 x_seq <- seq(-1.5, 1.5, length.out=n) y_seq <- seq(-1.5, 1.5, length.out=n) max_iter <- 50 threshold <- 2 res <- data.table() for (ix in seq_along(x_seq)) { for (iy in seq_along(y_seq)) { z <- complex(real=x_seq[ix], imaginary=y_seq[iy]) iter <- 0 while(Mod(z) < threshold && iter < max_iter) { z <- z*z + c_val iter <- iter + 1 } res <- rbind( res, data.table( x = x_seq[ix], y = y_seq[iy], iteration = iter ) ) } } ggplot(res, aes(x=x, y=y, color=iteration)) + geom_point(shape=15, size=1) + scale_color_viridis_c() + coord_fixed() + labs( title="Simple Julia Set (z^2 + c)", x="Re(z)", y="Im(z)" ) + theme_minimal()

- For each complex parameter

Jordan Normal Form - In linear algebra, the Jordan normal form (or Jordan canonical form) of a matrix is a block diagonal matrix with Jordan blocks, each corresponding to an eigenvalue.

A Jordan block for an eigenvalue

The Jordan form classifies matrices up to similarity transformations and is critical in solving systems of linear differential equations and more.

R demonstration (no built-in base R function to compute Jordan form, but we can show a small example):

library(data.table) # Usually, packages like 'jord' or 'expm' might help. # We'll just illustrate a 2x2 Jordan block for eigenvalue 3: J <- matrix(c(3,1,0,3), 2, 2, byrow=TRUE) J## [,1] [,2] ## [1,] 3 1 ## [2,] 0 3Joint Distribution - In statistics, a joint distribution describes the probability distribution of two or more random variables simultaneously. If

- Joint pmf (discrete case):

- Joint pdf (continuous case):

It extends the idea of a single-variable distribution to multiple dimensions.

R demonstration (bivariate normal sampling):

library(MASS) # for mvrnorm library(data.table) library(ggplot2) Sigma <- matrix(c(1, 0.5, 0.5, 1), 2, 2) # Cov matrix mu <- c(0, 0) set.seed(123) dt_joint <- data.table( mvrnorm(n=1000, mu=mu, Sigma=Sigma) ) setnames(dt_joint, c("V1","V2"), c("X","Y")) # Plot joint distribution via scatter plot ggplot(dt_joint, aes(x=X, y=Y)) + geom_point(alpha=0.5) + labs( title="Bivariate Normal Scatter", x="X", y="Y" ) + theme_minimal()

- Joint pmf (discrete case):

Kolmogorov Complexity - In algorithmic information theory, Kolmogorov complexity of a string is the length of the shortest description (program) that can produce that string on a universal computer (like a universal Turing machine).

Key Points:

- Measures the “information content” of a string.

- Uncomputable in the general case (no algorithm can compute the exact Kolmogorov complexity for every string).

- Often used to reason about randomness and compressibility.

No direct R demonstration is typical, as computing or estimating Kolmogorov complexity is a deep problem, but we can reason about approximate compression lengths with standard compressors.

Kruskal's Algorithm - In graph theory, Kruskal's algorithm finds a minimum spanning tree (MST) of a weighted graph by:

- Sorting edges in order of increasing weight.

- Adding edges one by one to the MST, provided they do not form a cycle.

- Repeating until all vertices are connected or edges are exhausted.

This greedy approach ensures an MST if the graph is connected.

R demonstration (a small example with igraph):

library(igraph)## ## Attaching package: 'igraph'## The following objects are masked from 'package:stats': ## ## decompose, spectrum## The following object is masked from 'package:base': ## ## union# Create a weighted graph g <- graph(edges=c("A","B","B","C","A","C","C","D","B","D"), directed=FALSE)## Warning: `graph()` was deprecated in igraph 2.1.0. ## ℹ Please use `make_graph()` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.E(g)$weight <- c(2, 4, 5, 1, 3) # just some weights # Use built-in MST function that uses Kruskal internally mst_g <- mst(g) mst_g## IGRAPH d1aa2b3 UNW- 4 3 -- ## + attr: name (v/c), weight (e/n) ## + edges from d1aa2b3 (vertex names): ## [1] A--B C--D B--D# Let's plot plot(mst_g, vertex.color="lightblue", vertex.size=30, edge.label=E(mst_g)$weight)

Kernel - In linear algebra, the kernel (or null space) of a linear map

- If

- The rank-nullity theorem links the dimension of the kernel with the dimension of the image.

R demonstration (finding the kernel of a matrix):

library(data.table) A <- matrix(c(1,2,3, 2,4,6, 1,1,2), nrow=3, byrow=TRUE) # We can find the null space using MASS::Null library(MASS) kerA <- Null(A) # basis for the kernel kerA## [,1] ## [1,] -8.944272e-01 ## [2,] 4.472136e-01 ## [3,] -1.024712e-15- If

K-Nearest Neighbors (KNN) - A KNN classifier (or regressor) predicts the label (or value) of a new point

Mathematical form (for classification):

where

R demonstration (using

class::knnfor classification):library(class) library(data.table) library(ggplot2) set.seed(123) n <- 100 x1 <- runif(n, 0, 5) x2 <- runif(n, 0, 5) y <- ifelse(x1 + x2 + rnorm(n) > 5, "A","B") dt_knn <- data.table(x1=x1, x2=x2, y=as.factor(y)) # We'll do a train/test split train_idx <- sample(1:n, size=70) train <- dt_knn[train_idx] test <- dt_knn[-train_idx] train_x <- as.matrix(train[, .(x1,x2)]) train_y <- train$y test_x <- as.matrix(test[, .(x1,x2)]) true_y <- test$y pred_knn <- knn(train_x, test_x, cl=train_y, k=3) accuracy <- mean(pred_knn == true_y) accuracy## [1] 0.8333333# Plot classification boundary grid_x1 <- seq(0,5, by=0.1) grid_x2 <- seq(0,5, by=0.1) grid_data <- data.table(expand.grid(x1=grid_x1, x2=grid_x2)) grid_mat <- as.matrix(grid_data[,.(x1,x2)]) grid_data[, pred := knn(train_x, grid_mat, cl=train_y, k=3)] ggplot() + geom_tile(data=grid_data, aes(x=x1, y=x2, fill=pred), alpha=0.4) + geom_point(data=dt_knn, aes(x=x1, y=x2, color=y), size=2) + scale_fill_manual(values=c("A"="lightblue","B"="salmon")) + scale_color_manual(values=c("A"="blue","B"="red")) + labs(title="K-Nearest Neighbors (k=3)", x="x1", y="x2") + theme_minimal()

K-means - In cluster analysis, k-means is an algorithm that partitions

Algorithm Outline:

- Choose

- Assign each data point to its nearest centroid.

- Recompute centroids as the mean of points in each cluster.

- Repeat steps 2-3 until assignments stabilize or a maximum iteration count is reached.

K-means often assumes data in a continuous space and can leverage knowledge of the distribution of points to identify cluster structure.

R demonstration (basic example):

library(data.table) library(ggplot2) set.seed(123) dt_data <- data.table( x = rnorm(50, 5, 1), y = rnorm(50, 2, 1) ) # Add another cluster dt_data2 <- data.table( x = rnorm(50, 10, 1), y = rnorm(50, 7, 1) ) dt_full <- rbind(dt_data, dt_data2) # k-means with 2 clusters res_km <- kmeans(dt_full[, .(x, y)], centers=2) dt_full[, cluster := factor(res_km$cluster)] ggplot(dt_full, aes(x=x, y=y, color=cluster)) + geom_point() + labs( title="k-means Clustering (k=2)", x="X", y="Y" ) + theme_minimal()

- Choose

Kurtosis - In statistics, kurtosis measures the “tailedness” of a distribution. The standard formula for sample kurtosis (excess kurtosis) is often:

- High kurtosis: heavy tails, outliers are more frequent.

- Low kurtosis: light tails, fewer extreme outliers (relative to a normal distribution).

R demonstration:

library(data.table) library(e1071) # for kurtosis function set.seed(123) dt_kurt <- data.table( normal = rnorm(500, mean=0, sd=1), heavy_tail = rt(500, df=3) # t-dist with df=3, heavier tails ) k_norm <- e1071::kurtosis(dt_kurt$normal) k_heavy <- e1071::kurtosis(dt_kurt$heavy_tail) k_norm## [1] -0.05820728k_heavy## [1] 7.802004Laplace Transform - In calculus, the Laplace transform of a function

assuming the integral converges.

Key points:

- Simplifies solving ordinary differential equations by converting them into algebraic equations in the

- Inverse Laplace transform recovers

R demonstration (no base R function for Laplace transforms, but we can do numeric approximations or use external packages. We show a naive numeric approach for a simple function

library(data.table) f <- function(t) exp(-t) laplace_numeric <- function(f, s, upper=10, n=1000) { # naive numerical approach t_vals <- seq(0, upper, length.out=n) dt <- (upper - 0)/n sum( exp(-s * t_vals) * f(t_vals) ) * dt } s_test <- 2 approx_LT <- laplace_numeric(f, s_test, upper=10) approx_LT## [1] 0.338025# The exact Laplace transform of e^{-t} is 1/(s+1). For s=2 => 1/3 ~ 0.3333- Simplifies solving ordinary differential equations by converting them into algebraic equations in the

Laplacian - In multivariable calculus, the Laplacian of a scalar function

- In 2D:

- In 3D:

- The concept generalises to higher dimension.

- The Laplacian is crucial in PDEs like the heat equation and wave equation.

No direct R built-in for second partial derivatives numerically, but we can approximate:

library(data.table) f_xy <- function(x, y) x^2 + y^2 laplacian_approx <- function(f, x, y, h=1e-4) { # second partial w.r.t x f_xph <- f(x+h, y); f_xmh <- f(x-h, y); f_xyc <- f(x, y) d2f_dx2 <- (f_xph - 2*f_xyc + f_xmh)/(h^2) # second partial w.r.t y f_yph <- f(x, y+h); f_ymh <- f(x, y-h) d2f_dy2 <- (f_yph - 2*f_xyc + f_ymh)/(h^2) d2f_dx2 + d2f_dy2 } laplacian_approx(f_xy, 2, 3)## [1] 4# For f(x,y)= x^2 + y^2, exact Laplacian = 2 + 2 = 4- In 2D:

L'Hôpital's Rule - In calculus, L'Hôpital's rule is a result for evaluating certain indeterminate forms of limit expressions. If

produces indeterminate forms like

provided the latter limit exists. It relies on the concept of the derivative.

Simple R demonstration (symbolic approach would be used in a CAS, but we can do numeric checks):

library(data.table) f <- function(x) x^2 - 1 g <- function(x) x - 1 # Evaluate near x=1 to see 0/0 x_vals <- seq(0.9, 1.1, by=0.01) dt_lhop <- data.table( x = x_vals, f_x = f(x_vals), g_x = g(x_vals), ratio = f(x_vals)/g(x_vals) ) head(dt_lhop)## x f_x g_x ratio ## <num> <num> <num> <num> ## 1: 0.90 -0.1900 -0.10 1.90 ## 2: 0.91 -0.1719 -0.09 1.91 ## 3: 0.92 -0.1536 -0.08 1.92 ## 4: 0.93 -0.1351 -0.07 1.93 ## 5: 0.94 -0.1164 -0.06 1.94 ## 6: 0.95 -0.0975 -0.05 1.95We can see the ratio near x=1 is close to the ratio of derivatives at that point:

- f'(x) = 2x

- g'(x) = 1 So at x=1, ratio ~ 2(1)/1 = 2.

Limit - In calculus, a limit describes the value that a function (or sequence) “approaches” as the input (or index) moves toward some point. For a function

means that

Key role in:

- Defining the derivative:

- Defining continuity and integrals.

R demonstration (numeric approximation of a limit at a point):

library(data.table) f <- function(x) (x^2 - 1)/(x - 1) # Indeterminate at x=1, but simplifies to x+1 x_vals <- seq(0.9, 1.1, by=0.01) dt_lim <- data.table( x = x_vals, f_x = f(x_vals) ) dt_lim## x f_x ## <num> <num> ## 1: 0.90 1.90 ## 2: 0.91 1.91 ## 3: 0.92 1.92 ## 4: 0.93 1.93 ## 5: 0.94 1.94 ## 6: 0.95 1.95 ## 7: 0.96 1.96 ## 8: 0.97 1.97 ## 9: 0.98 1.98 ## 10: 0.99 1.99 ## 11: 1.00 NaN ## 12: 1.01 2.01 ## 13: 1.02 2.02 ## 14: 1.03 2.03 ## 15: 1.04 2.04 ## 16: 1.05 2.05 ## 17: 1.06 2.06 ## 18: 1.07 2.07 ## 19: 1.08 2.08 ## 20: 1.09 2.09 ## 21: 1.10 2.10 ## x f_x# As x -> 1, f(x)-> 2.- Defining the derivative:

LDA (Linear Discriminant Analysis) - A linear discriminant analysis technique for classification which finds a linear combination of features that best separates classes. It aims to maximise between-class variance over within-class variance.

Mathematical objective: Given classes

where

R demonstration (using

MASS::ldaon synthetic data):library(MASS) library(data.table) library(ggplot2) set.seed(123) n <- 50 x1_class1 <- rnorm(n, mean=2, sd=1) x2_class1 <- rnorm(n, mean=2, sd=1) x1_class2 <- rnorm(n, mean=-2, sd=1) x2_class2 <- rnorm(n, mean=-2, sd=1) dt_lda_ex <- data.table( x1 = c(x1_class1, x1_class2), x2 = c(x2_class1, x2_class2), y = factor(c(rep("Class1", n), rep("Class2", n))) ) fit_lda <- lda(y ~ x1 + x2, data=dt_lda_ex) fit_lda## Call: ## lda(y ~ x1 + x2, data = dt_lda_ex) ## ## Prior probabilities of groups: ## Class1 Class2 ## 0.5 0.5 ## ## Group means: ## x1 x2 ## Class1 2.034404 2.146408 ## Class2 -2.253900 -1.961193 ## ## Coefficients of linear discriminants: ## LD1 ## x1 -0.7461484 ## x2 -0.7780657# Project data onto LD1 proj <- predict(fit_lda) dt_proj <- cbind(dt_lda_ex, LD1=proj$x[,1]) ggplot(dt_proj, aes(x=LD1, fill=y)) + geom_histogram(alpha=0.6, position="identity") + labs(title="LDA Projection onto LD1", x="LD1", y="Count") + theme_minimal()## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

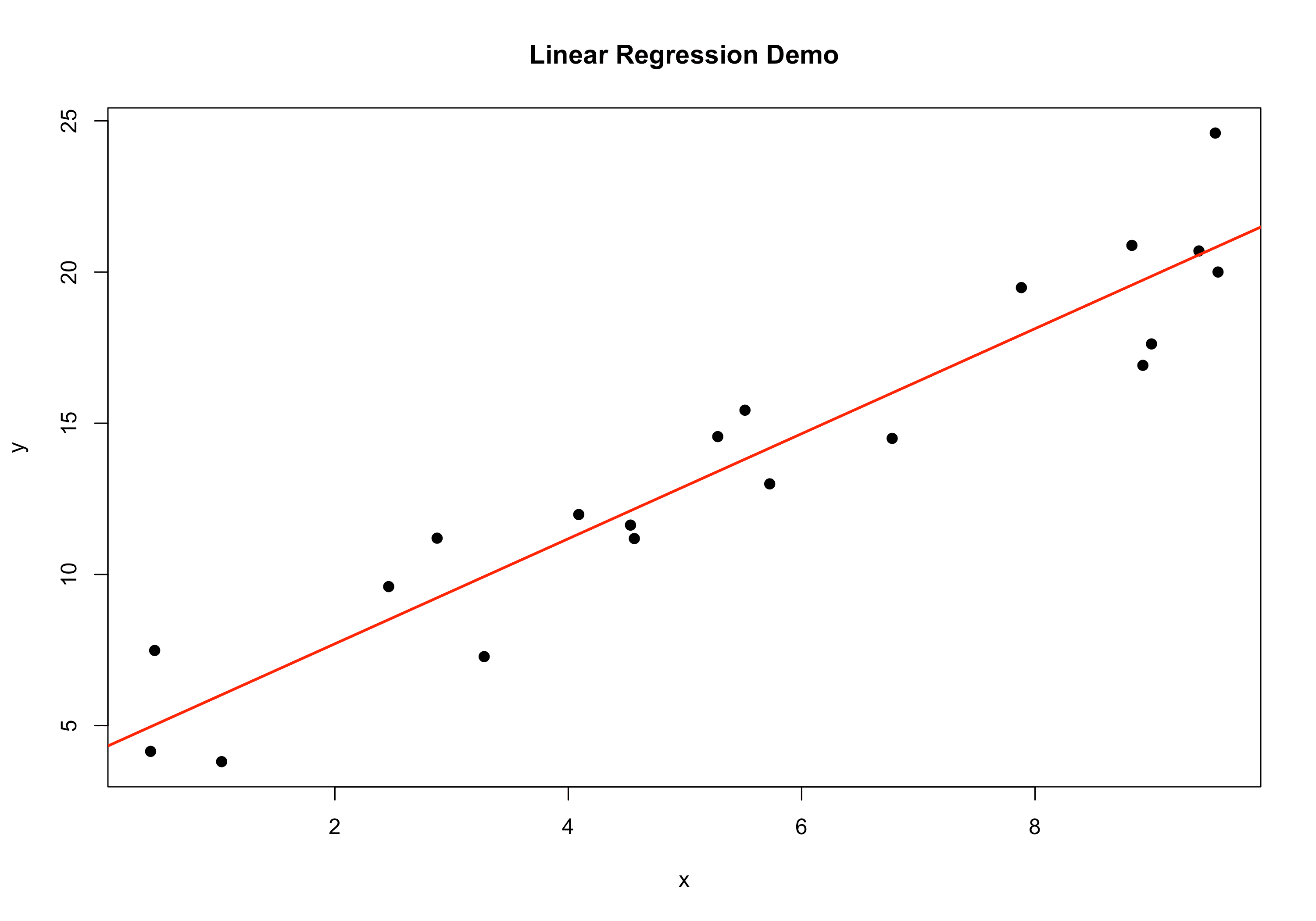

Linear Regression - In machine learning and statistics, linear regression models the relationship between a scalar response

Key points:

- Least squares estimates the coefficients

- The fitted line (or hyperplane in multiple dimensions) can be used for prediction and inference.

Mathematical formula: If we have data

We find

R demonstration (fitting a simple linear regression using base R):

library(data.table) set.seed(123) n <- 20 x <- runif(n, min=0, max=10) y <- 3 + 2*x + rnorm(n, mean=0, sd=2) # "true" slope=2, intercept=3 dt_lr <- data.table(x=x, y=y) fit <- lm(y ~ x, data=dt_lr) summary(fit)## ## Call: ## lm(formula = y ~ x, data = dt_lr) ## ## Residuals: ## Min 1Q Median 3Q Max ## -2.8189 -1.2640 -0.1737 1.3732 3.7852 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 4.2326 0.8766 4.828 0.000135 *** ## x 1.7370 0.1392 12.481 2.67e-10 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 1.902 on 18 degrees of freedom ## Multiple R-squared: 0.8964, Adjusted R-squared: 0.8907 ## F-statistic: 155.8 on 1 and 18 DF, p-value: 2.673e-10# Plot plot(y ~ x, data=dt_lr, pch=19, main="Linear Regression Demo") abline(fit, col="red", lwd=2)

- Least squares estimates the coefficients

LLM (Large Language Model) - A large language model is typically a Transformer-based or similarly advanced architecture with billions (or more) of parameters, trained on massive text corpora to generate coherent text or perform NLP tasks.

Key points:

- Uses self-attention to handle long contexts.

- Learns complex linguistic structures, can generate next tokens based on context.

Mathematical gist: At each token step, an LLM computes a probability distribution over the vocabulary:

where

R demonstration (We can show a mini example of text generation with

keras, but typically giant LLM training isn't feasible in R. We'll do conceptual snippet):# Conceptual only: library(data.table) cat("Training an LLM is typically done in Python with large GPU clusters.\nWe'll do a small toy example with a simple RNN or minimal next-token model.")## Training an LLM is typically done in Python with large GPU clusters. ## We'll do a small toy example with a simple RNN or minimal next-token model.Likelihood - In statistics, the likelihood function measures how well a given model parameter explains observed data. It’s similar to a distribution but viewed from the parameter’s perspective:

- For data

Key points:

- Maximum likelihood estimation chooses

- Log-likelihood is commonly used for convenience:

R demonstration (fitting a simple normal likelihood):

library(data.table) set.seed(123) x_data <- rnorm(50, mean=5, sd=2) lik_fun <- function(mu, sigma, x) { # Normal pdf for each x, product as likelihood # i.e. prod(dnorm(x, mean=mu, sd=sigma)) # We'll return negative log-likelihood for convenience -sum(dnorm(x, mean=mu, sd=sigma, log=TRUE)) } # We'll do a quick grid search mu_seq <- seq(4, 6, by=0.1) sigma_seq <- seq(1, 3, by=0.1) res <- data.table() for(m in mu_seq) { for(s in sigma_seq) { nll <- lik_fun(m, s, x_data) res <- rbind(res, data.table(mu=m, sigma=s, nll=nll)) } } res_min <- res[which.min(nll)] res_min## mu sigma nll ## <num> <num> <num> ## 1: 5.1 1.8 101.2725- For data

Monoid - In abstract algebra, a monoid is a semigroup with an identity element. Specifically, a set

- Associativity:

- Identity:

Key points:

- A group is a monoid where every element also has an inverse.

- Examples: Natural numbers under addition with identity 0, strings under concatenation with identity "" (empty string).

No direct R demonstration typical, but we can show a small "string monoid":

library(data.table) str_monoid_op <- function(a,b) paste0(a,b) # concatenation e <- "" # identity # Check associativity on a small example a<-"cat"; b<-"fish"; c<-"food" assoc_left <- str_monoid_op(str_monoid_op(a,b), c) assoc_right <- str_monoid_op(a, str_monoid_op(b,c)) data.table(assoc_left, assoc_right)## assoc_left assoc_right ## <char> <char> ## 1: catfishfood catfishfood- Associativity:

Matrix - A matrix is a rectangular array of numbers (or more abstract objects) arranged in rows and columns. Matrices are fundamental in determinant calculations, linear transformations, and a variety of applications:

Key operations:

- Addition and scalar multiplication (element-wise).

- Matrix multiplication.

- Transposition and inversion (if square and invertible).

R demonstration (basic matrix creation and operations):

library(data.table) A <- matrix(c(1,2,3,4,5,6), nrow=2, byrow=TRUE) B <- matrix(c(10,20,30,40,50,60), nrow=2, byrow=TRUE) A_plus_B <- A + B A_times_B <- A %*% t(B) # 2x3 %*% 3x2 => 2x2 A_plus_B## [,1] [,2] [,3] ## [1,] 11 22 33 ## [2,] 44 55 66A_times_B## [,1] [,2] ## [1,] 140 320 ## [2,] 320 770Markov Chain - In probability, a Markov chain is a stochastic-process with the Markov property: the next state depends only on the current state, not the history. Formally:

Key points:

- Transition probabilities can be arranged in a matrix for finite state spaces.

- Widely used in queueing, random walks, genetics, finance.

R demonstration (a simple Markov chain simulation):

library(data.table) # Transition matrix for states A,B P <- matrix(c(0.7, 0.3, 0.4, 0.6), nrow=2, byrow=TRUE) rownames(P) <- colnames(P) <- c("A","B") simulate_markov <- function(P, n=10, start="A") { states <- rownames(P) chain <- character(n) chain[1] <- start for(i in 2:n) { current <- chain[i-1] idx <- which(states==current) chain[i] <- sample(states, 1, prob=P[idx,]) } chain } chain_res <- simulate_markov(P, n=15, start="A") chain_res## [1] "A" "A" "A" "B" "B" "B" "A" "B" "A" "A" "B" "A" "A" "A" "A"Mutually Exclusive Events - In probability, two events

In other words,

since

R demonstration: no direct R function, but we can illustrate logic:

# Suppose events are flipping a coin: # A = heads, B = tails # A and B are mutually exclusive. # We can do a small simulation set.seed(123) flips <- sample(c("H","T"), size=100, replace=TRUE) mean(flips == "H") # approximate P(A)## [1] 0.57mean(flips == "T") # approximate P(B)## [1] 0.43# Overlap: none, because a single flip can't be both H and TMean - In statistics, the mean (or average) of a set of values

This is the arithmetic mean. Compare to the harmonic-mean or geometric mean for other contexts. The mean is often used to summarise a distribution.

R demonstration:

library(data.table) dt_values <- data.table(val = c(2,3,5,7,11)) mean_val <- mean(dt_values$val) mean_val## [1] 5.6Median - In statistics, the median is the value separating the higher half from the lower half of a distribution. For an ordered dataset of size

- If

- If

R demonstration:

library(data.table) dt_vals <- data.table(val = c(2,3,7,9,11)) med_val <- median(dt_vals$val) med_val## [1] 7- If

Mode - In statistics, the mode is the most frequently occurring value in a distribution. Some distributions (e.g., uniform) may have multiple modes (or no strong mode) if all values are equally likely.

R demonstration (custom function):

library(data.table) mode_fn <- function(x) { # returns the value(s) with highest frequency tab <- table(x) freq_max <- max(tab) as.numeric(names(tab)[tab == freq_max]) } dt_data <- data.table(vals = c(1,2,2,3,2,5,5,5)) mode_fn(dt_data$vals)## [1] 2 5Manifold - In topology and differential geometry, a manifold is a topological-space that locally resembles Euclidean space. Formally, an

Key points:

- The concept of dimension is central: a 2D manifold locally looks like a plane, a 3D manifold like space, etc.

- Smooth manifolds allow calculus-like operations on them.

No direct R demonstration, but we can illustrate how to store a “chart” or local coordinate system conceptually:

library(data.table) cat("Manifolds are an advanced concept. In R, we'd handle geometry libraries for numeric solutions.")## Manifolds are an advanced concept. In R, we'd handle geometry libraries for numeric solutions.Nested Radical - A nested radical is an expression containing radicals (square roots, etc.) inside other radicals, for example:

Such expressions sometimes simplify to closed-forms. A famous example is:

Though symbolic manipulation is more typical than numeric for these. Minimal R demonstration here:

# We could approximate a short nested radical numerically: nested_radical_approx <- function(n) { # approximate: sqrt(1 + 2*sqrt(1 + 3*sqrt(1+... up to n steps # This is more a demonstration than a standard function val <- 0 for(k in seq(n, 2, by=-1)) { val <- sqrt(1 + k*val) } sqrt(1 + 2*val) # final } nested_radical_approx(5)## [1] 2.473795Number Line - The number line (real line) is a straight line on which every real number corresponds to a unique point. Basic structures like an interval are subsets of the number line:

- Negative numbers extend to the left, positive numbers to the right.

- Zero is typically placed at the origin.

No direct R demonstration is typical, but we can illustrate numeric representations:

library(data.table) vals <- seq(-3, 3, by=1) vals## [1] -3 -2 -1 0 1 2 3Non-Euclidean Geometry - In geometry, non-Euclidean geometry refers to either hyperbolic or elliptic geometry (or others) that reject or modify Euclid’s fifth postulate (the parallel postulate).

Key points:

- Hyperbolic geometry: infinite lines diverge more rapidly, sums of angles in triangles are < 180°.

- Elliptic geometry: lines “curve,” angles in triangles sum to > 180°.

No standard R demonstration, but we might explore transformations or plots for illustrative geometry.

# No direct numeric example, but let's just place a note: cat("No direct numeric example for non-Euclidean geometry in base R. Consider specialized geometry packages or external tools.")## No direct numeric example for non-Euclidean geometry in base R. Consider specialized geometry packages or external tools.Naive Bayes - In machine learning, Naive Bayes is a probabilistic classifier applying Bayes' theorem with a “naive” (independence) assumption among features given the class. For a class

Key points:

- Independence assumption simplifies computation of

- Effective in text classification (bag-of-words assumption).

R demonstration (using

e1071::naiveBayeson synthetic data):library(e1071) library(data.table) library(ggplot2) set.seed(123) n <- 100 x1 <- rnorm(n, mean=2, sd=1) x2 <- rnorm(n, mean=-1, sd=1) cl1 <- data.table(x1, x2, y="Class1") x1 <- rnorm(n, mean=-2, sd=1) x2 <- rnorm(n, mean=2, sd=1) cl2 <- data.table(x1, x2, y="Class2") dt_nb <- rbind(cl1, cl2) fit_nb <- naiveBayes(y ~ x1 + x2, data=dt_nb) fit_nb## ## Naive Bayes Classifier for Discrete Predictors ## ## Call: ## naiveBayes.default(x = X, y = Y, laplace = laplace) ## ## A-priori probabilities: ## Y ## Class1 Class2 ## 0.5 0.5 ## ## Conditional probabilities: ## x1 ## Y [,1] [,2] ## Class1 2.090406 0.9128159 ## Class2 -1.879535 0.9498790 ## ## x2 ## Y [,1] [,2] ## Class1 -1.107547 0.9669866 ## Class2 1.963777 1.0387812# Predict grid_x1 <- seq(-5,5, by=0.2) grid_x2 <- seq(-5,5, by=0.2) grid_data <- data.table(expand.grid(x1=grid_x1, x2=grid_x2)) grid_data[, pred := predict(fit_nb, newdata=.SD)] ggplot() + geom_tile(data=grid_data, aes(x=x1, y=x2, fill=pred), alpha=0.4) + geom_point(data=dt_nb, aes(x=x1, y=x2, color=y), size=2) + scale_fill_manual(values=c("Class1"="lightblue","Class2"="salmon")) + scale_color_manual(values=c("Class1"="blue","Class2"="red")) + labs(title="Naive Bayes Classification", x="x1", y="x2") + theme_minimal()

- Independence assumption simplifies computation of

Neural Network - In machine learning, a neural network is a collection of connected units (neurons) arranged in layers. Each neuron computes a weighted sum of inputs, applies an activation function

Key points:

- A typical feed-forward network with one hidden layer might compute: '60196' z_1^1 = \sigma( W_1 x + b_1), \quad z_2^2 = \sigma( W_2 z_1^1 + b_2 ), '60196'

- Training uses gradient-based optimisation (see gradient) (e.g., backpropagation) to adjust weights.

R demonstration (a small neural network using

nnetpackage):library(data.table) library(nnet) set.seed(123) n <- 50 x <- runif(n, min=0, max=2*pi) y <- sin(x) + rnorm(n, sd=0.1) dt_nn <- data.table(x=x, y=y) # Fit a small single-hidden-layer neural network fit_nn <- nnet(y ~ x, data=dt_nn, size=5, linout=TRUE, trace=FALSE) # Predictions newx <- seq(0,2*pi,length.out=100) pred_y <- predict(fit_nn, newdata=data.table(x=newx)) plot(dt_nn, dt_nn, main="Neural Network Demo", pch=19) lines(newx, sin(newx), col="blue", lwd=2, lty=2) # true function lines(newx, pred_y, col="red", lwd=2) # NN approximation

Normal Distribution - In statistics, the normal distribution (or Gaussian) is a continuous probability distribution with probability density function:

where '56956'\mu'56956' is the mean and '56956'\sigma^2'56956' is the variance.

Key points:

- Symmetric, bell-shaped curve.

- Many natural phenomena approximate normality by Central Limit Theorem arguments.

R demonstration:

library(data.table) library(ggplot2) set.seed(123) dt_norm <- data.table(x = rnorm(1000, mean=5, sd=2)) ggplot(dt_norm, aes(x=x)) + geom_histogram(bins=30, fill="lightblue", color="black", aes(y=..density..)) + geom_density(color="red", size=1) + labs( title="Normal Distribution Example", x="Value", y="Density" ) + theme_minimal()## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0. ## ℹ Please use `linewidth` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.## Warning: The dot-dot notation (`..density..`) was deprecated in ggplot2 3.4.0. ## ℹ Please use `after_stat(density)` instead. ## This warning is displayed once every 8 hours. ## Call `lifecycle::last_lifecycle_warnings()` to see where this warning ## was generated.

Null Hypothesis - In statistics, the null hypothesis (commonly denoted

Key points:

- We either “reject

- The alternative hypothesis

R demonstration (t-test example, focusing on

library(data.table) set.seed(123) dt_null <- data.table( groupA = rnorm(20, mean=5, sd=1), groupB = rnorm(20, mean=5.2, sd=1) ) t_res <- t.test(dt_null$groupA, dt_null$groupB, var.equal=TRUE) t_res## ## Two Sample t-test ## ## data: dt_null$groupA and dt_null$groupB ## t = -0.0249, df = 38, p-value = 0.9803 ## alternative hypothesis: true difference in means is not equal to 0 ## 95 percent confidence interval: ## -0.5859110 0.5716729 ## sample estimates: ## mean of x mean of y ## 5.141624 5.148743- We either “reject

Odd Function - A function

for all x in the domain. Graphically, odd functions exhibit symmetry about the origin. Classic examples include

R demonstration (simple numeric check for an odd function

library(data.table) x_vals <- seq(-3, 3, by=1) dt_odd <- data.table( x = x_vals, f_x = x_vals^3, f_negx = (-x_vals)^3 ) # We expect f_negx to be -f_x if the function is truly odd. dt_odd## x f_x f_negx ## <num> <num> <num> ## 1: -3 -27 27 ## 2: -2 -8 8 ## 3: -1 -1 1 ## 4: 0 0 0 ## 5: 1 1 -1 ## 6: 2 8 -8 ## 7: 3 27 -27One-Hot Encoding - In data science and machine learning, one-hot encoding is a method to transform categorical variables into numeric arrays with only one “active” position. For example, a feature “colour” with possible values (red, green, blue) might become:

- red: (1, 0, 0)

- green: (0, 1, 0)

- blue: (0, 0, 1)

R demonstration (converting a factor to dummy variables):

library(data.table) library(ggplot2) dt_oh <- data.table(colour = c("red", "blue", "green", "green", "red")) dt_oh[, colour := factor(colour)] # We'll create dummy variables manually for(lvl in levels(dt_oh$colour)) { dt_oh[[paste0("is_", lvl)]] <- ifelse(dt_oh$colour == lvl, 1, 0) } dt_oh## colour is_blue is_green is_red ## <fctr> <num> <num> <num> ## 1: red 0 0 1 ## 2: blue 1 0 0 ## 3: green 0 1 0 ## 4: green 0 1 0 ## 5: red 0 0 1Orthogonal - In linear algebra, vectors (or subspaces) are orthogonal if their dot product is zero. A set of vectors is orthogonal if every pair of distinct vectors in the set is orthogonal. A matrix is an orthogonal matrix if

Key points:

- Orthogonality generalises the concept of perpendicularity in higher dimensions.

- Orthogonal transformations preserve lengths and angles.

R demonstration (check if a matrix is orthogonal):

library(data.table) Q <- matrix(c(0,1, -1,0), nrow=2, byrow=TRUE) # Q^T Q test_orth <- t(Q) %*% Q test_orth## [,1] [,2] ## [1,] 1 0 ## [2,] 0 1# If Q is orthogonal, test_orth should be the 2x2 identity.Order Statistic - In statistics, an order statistic is one of the values in a sorted sample. Given

Key points:

- Distribution of order statistics helps in confidence intervals, extreme value theory.

- The minimum is the 1st order statistic, the maximum is the

R demonstration:

library(data.table) set.seed(123) x_vals <- sample(1:100, 10) dt_ord <- data.table(x = x_vals) dt_ord_sorted <- dt_ord[order(x)] dt_ord_sorted[, idx := .I] # .I is row index in data.table dt_ord_sorted## x idx ## <int> <int> ## 1: 14 1 ## 2: 25 2 ## 3: 31 3 ## 4: 42 4 ## 5: 43 5 ## 6: 50 6 ## 7: 51 7 ## 8: 67 8 ## 9: 79 9 ## 10: 97 10Outlier - In statistics, an outlier is a data point significantly distant from the rest of the distribution. Outliers can arise from measurement errors, heavy-tailed distributions, or genuine extreme events.

Key points:

- Outliers can skew means, inflate variances, or distort analyses.

- Detection methods include IQR-based rules, z-scores, or robust statistics.

R demonstration (basic detection via boxplot stats):

library(data.table) library(ggplot2) set.seed(123) dt_out <- data.table(x = c(rnorm(30, mean=10, sd=1), 25)) # one extreme outlier ggplot(dt_out, aes(y=x)) + geom_boxplot(fill="lightblue") + theme_minimal()

stats <- boxplot.stats(dt_out$x) stats$out## [1] 25Partial Derivative - In multivariable calculus, a partial derivative of a function

with respect to

Key points:

- Used in computing the gradient.

- The concept generalises derivative to higher dimensions.

R demonstration (numerical approximation for

library(data.table) f_xy <- function(x,y) x^2 + 2*x*y partial_x <- function(f, x, y, h=1e-6) { (f(x+h, y) - f(x, y)) / h } val <- partial_x(f_xy, 2, 3) val## [1] 10# Compare to analytic partial derivative wrt x: 2x + 2y. # At (2,3) => 2*2 + 2*3=4+6=10Permutation - In combinatorics, a permutation is an arrangement of all or part of a set of objects in a specific order. For

Compare with a combination, where order does not matter.

R demonstration (simple function for permutation count):

library(data.table) perm_func <- function(n,k) factorial(n)/factorial(n-k) perm_5_3 <- perm_func(5,3) perm_5_3## [1] 60# which is 5!/2! = 60PPO (Proximal Policy Optimization) - An advanced reinforcement learning algorithm by OpenAI, improving policy gradient methods by controlling how far the new policy can deviate from the old policy. The objective uses a clipped surrogate function:

where:

Key points:

- Prevents large policy updates that break old policy.

- Often combined with a value function critic for advantage estimation.

R demonstration (No standard PPO in base R, but let's conceptually illustrate partial code with

rlang? We'll do a simplified snippet):library(data.table) set.seed(123) cat("Implementing PPO in pure R is possible but quite complex. We'll pseudo-code a single update step:")## Implementing PPO in pure R is possible but quite complex. We'll pseudo-code a single update step:ppo_update_step <- function(log_prob_old, log_prob_new, advantage, epsilon=0.2) { ratio <- exp(log_prob_new - log_prob_old) unclipped <- ratio * advantage clipped <- pmax(pmin(ratio, 1+epsilon), 1-epsilon)* advantage # Surrogate objective obj <- mean(pmin(unclipped, clipped)) obj } log_prob_old <- rnorm(10, mean=-1) log_prob_new <- log_prob_old + rnorm(10, mean=0, sd=0.1) advantage <- rnorm(10, mean=1, sd=0.5) ppo_update_step(log_prob_old, log_prob_new, advantage, 0.2)## [1] 0.7827393Percentile - In statistics, a percentile is a measure used to indicate the value below which a given percentage of observations in a group of observations falls. For example, the 50th percentile is the median.

Key points:

- Commonly used in test scores, growth charts, and any context where relative standing is measured.

- The distribution of data helps interpret percentile rank.

R demonstration (finding percentiles via

quantile):library(data.table) set.seed(123) dt_p <- data.table(vals = rnorm(100, mean=0, sd=1)) quantile(dt_p$vals, probs=c(0.25, 0.5, 0.75))## 25% 50% 75% ## -0.49385424 0.06175631 0.69181917Poisson Distribution - In probability, the Poisson distribution is a discrete distribution describing the probability of a number of events occurring in a fixed interval, given the events occur with a known average rate (the mean) and independently of the time since the last event.

Its pmf for

where '57323'\lambda'57323' is both the mean and the variance of the distribution.

R demonstration:

library(data.table) library(ggplot2) lambda_val <- 4 dt_pois <- data.table(k = 0:15) dt_pois[, prob := dpois(k, lambda_val)] ggplot(dt_pois, aes(x=factor(k), y=prob)) + geom_col(fill="lightblue", color="black") + labs( title=paste("Poisson distribution with lambda =", lambda_val), x="k", y="Probability" ) + theme_minimal()

Proportion - In statistics, a proportion represents a fraction of the whole—essentially, how many observations fall into a particular category, divided by the total.

Key points:

- Used in categorical data analysis.

- Confidence intervals for a proportion use techniques like the Wald method, Wilson method, etc.

R demonstration (simple proportion of “heads” in coin flips):

library(data.table) set.seed(123) flips <- sample(c("H","T"), 50, replace=TRUE) prop_heads <- mean(flips=="H") prop_heads## [1] 0.6Quadratic - A quadratic function is a polynomial of degree 2, often written as:

with

R demonstration:

library(data.table) x_vals <- seq(-5,5, by=0.5) f_quad <- function(x) 2*x^2 + 3*x - 1 dt_quad <- data.table( x = x_vals, y = f_quad(x_vals) ) head(dt_quad)## x y ## <num> <num> ## 1: -5.0 34 ## 2: -4.5 26 ## 3: -4.0 19 ## 4: -3.5 13 ## 5: -3.0 8 ## 6: -2.5 4Quartic - A quartic function (or bi-quadratic) is a polynomial of degree 4:

where

R demonstration (plotting a quartic):

library(data.table) library(ggplot2) f_quartic <- function(x) x^4 - 2*x^3 + 3*x + 1 x_vals <- seq(-3,3, by=0.1) dt_qr <- data.table( x = x_vals, y = f_quartic(x_vals) ) ggplot(dt_qr, aes(x=x, y=y)) + geom_line(color="blue") + labs( title="Example Quartic: x^4 - 2x^3 + 3x + 1", x="x", y="f(x)" ) + theme_minimal()

Quaternion - In algebra, a quaternion is a hypercomplex number of the form

where

Key points:

- Noncommutative:

- Used in 3D rotations (e.g., in computer graphics, robotics).

No direct base R demonstration of quaternions, but certain libraries handle them. Let’s just illustrate we can store them as a list:

library(data.table) # We'll store quaternions as lists, no direct operation q1 <- list(a=1, b=2, c=3, d=-1) q1## $a ## [1] 1 ## ## $b ## [1] 2 ## ## $c ## [1] 3 ## ## $d ## [1] -1- Noncommutative:

Q-Learning - A reinforcement learning algorithm that learns a value function

where:

Key points:

- Model-free: no prior knowledge of environment dynamics is needed.

- A type of Markov-chain approach if states follow Markov property.

R demonstration (mini example of a gridworld Q-learning approach, conceptual code only):

library(data.table) # We'll define a small 1D environment: states 1..5, with 5 as terminal Q <- matrix(0, nrow=5, ncol=2, dimnames=list(1:5, c("left","right"))) alpha <- 0.1 gamma <- 0.9 simulate_episode <- function() { s <- 3 # start in the middle total_reward <- 0 while(s != 1 && s != 5) { # pick an action a <- sample(c("left","right"),1) s_new <- if(a=="left") s-1 else s+1 r <- if(s_new==5) 10 else 0 # Q update Q[s,a] <<- Q[s,a] + alpha*(r + gamma*max(Q[s_new,]) - Q[s,a]) s <- s_new total_reward <- total_reward + r } total_reward } # run a few episodes for(i in 1:100){ simulate_episode() } Q## left right ## 1 0.000000 0.000000 ## 2 0.000000 6.151484 ## 3 5.053238 8.910591 ## 4 7.515370 9.953616 ## 5 0.000000 0.000000Queueing Theory - In probability and operations research, queueing theory studies the behaviour of waiting lines or queues. Models often involve a Poisson arrival distribution and exponential service times, e.g., the M/M/1 queue.

Key points:

- Performance measures: average waiting time, queue length, server utilisation.

- Widely applied in telecommunications, computer networks, and service systems.

R demonstration (simulation of a simple queue, optional approaches exist but we show a conceptual snippet):

library(data.table) set.seed(123) # We'll simulate interarrival times with rexp(rate=lambda), # and service times with rexp(rate=mu). simulate_queue <- function(n_customers, lambda=1, mu=1) { # generate interarrival times inter_arr <- rexp(n_customers, rate=lambda) arrival_times <- cumsum(inter_arr) service_times <- rexp(n_customers, rate=mu) # track when each customer starts service start_service <- numeric(n_customers) finish_service <- numeric(n_customers) for(i in seq_len(n_customers)) { if(i==1) { start_service[i] <- arrival_times[i] } else { start_service[i] <- max(arrival_times[i], finish_service[i-1]) } finish_service[i] <- start_service[i] + service_times[i] } data.table( customer = 1:n_customers, arrival = arrival_times, start = start_service, finish = finish_service, wait = start_service - arrival_times ) } dt_queue <- simulate_queue(10, lambda=1, mu=2) dt_queue## customer arrival start finish wait ## <int> <num> <num> <num> <num> ## 1: 1 0.8434573 0.8434573 1.345872 0.0000000 ## 2: 2 1.4200675 1.4200675 1.660175 0.0000000 ## 3: 3 2.7491224 2.7491224 2.889629 0.0000000 ## 4: 4 2.7806998 2.8896292 3.078188 0.1089295 ## 5: 5 2.8369107 3.0781881 3.172330 0.2412774 ## 6: 6 3.1534120 3.1723301 3.597223 0.0189182 ## 7: 7 3.4676392 3.5972232 4.378825 0.1295840 ## 8: 8 3.6129060 4.3788250 4.618205 0.7659189 ## 9: 9 6.3391425 6.3391425 6.634610 0.0000000 ## 10: 10 6.3682960 6.6346099 8.655116 0.2663140Quartile - In statistics, a quartile is a special case of a percentile that divides the data into four equal parts. The second quartile (Q2) is the median. The first quartile (Q1) and third quartile (Q3) frame the interquartile range (IQR).